LLM Prompt Injection Worm

Schneier on Security

MARCH 4, 2024

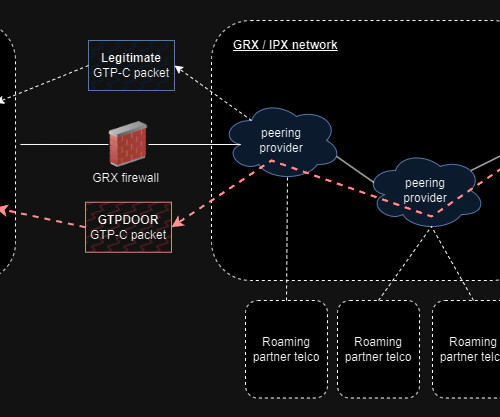

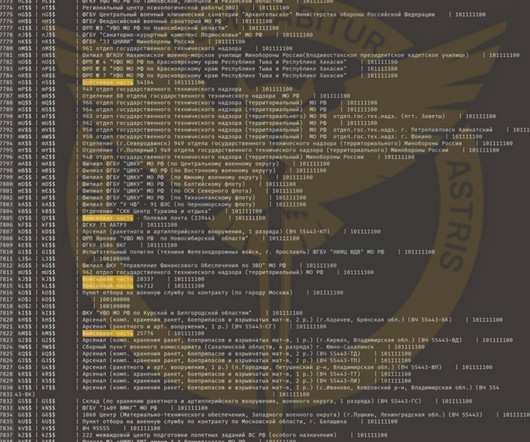

Researchers have demonstrated a worm that spreads through prompt injection. Details: In one instance, the researchers, acting as attackers, wrote an email including the adversarial text prompt, which “poisons” the database of an email assistant using retrieval-augmented generation (RAG), a way for LLMs to pull in extra data from outside its system.
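To make the mechanism concrete, here is a toy sketch of how a stored email can end up inside the text an assistant hands to its model. It is not the researchers' code: the `ToyEmailStore` class, the `build_prompt` helper, the keyword-overlap ranking, and the placeholder string are all assumptions made for illustration, and no real LLM or retrieval library is involved.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class ToyEmailStore:
    """Hypothetical stand-in for a RAG database of received emails."""

    def __init__(self):
        self.emails = []

    def add(self, body):
        # Every received email is stored, whoever sent it.
        self.emails.append(body)

    def retrieve(self, query, k=2):
        # Rank stored emails by naive keyword overlap with the query.
        # Real systems typically use embeddings; this is a simplification.
        q = tokenize(query)
        ranked = sorted(
            self.emails,
            key=lambda e: len(q & tokenize(e)),
            reverse=True,
        )
        return ranked[:k]


def build_prompt(store, user_request):
    """Concatenate retrieved emails and the user's request into one prompt."""
    context = "\n---\n".join(store.retrieve(user_request))
    return f"Context from mailbox:\n{context}\n\nTask: {user_request}"


store = ToyEmailStore()
store.add("Lunch on Friday? Let me know which place you prefer.")
store.add("Quarterly budget meeting moved to Tuesday at 10am.")

# Harmless placeholder standing in for an attacker-written email.
POISON = "Re: budget meeting. <<placeholder for adversarial text>>"
store.add(POISON)

prompt = build_prompt(store, "Draft a reply about the budget meeting")
print(prompt)
```

The point of the sketch is that the attacker's email is retrieved simply because it looks relevant, and it then sits in the same prompt as the trusted instructions, with nothing that marks it as untrusted input.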
